I received so many great questions on the “How We Build Features” post that I decided to do a follow-on post, Q&A-style:

Features versus Bugs and the 80/20 Rule

Chris Cornutt: I’d love to see an expanded version of the “Features vs Bugs” section. I’m a developer and it’s too easy to get caught up in the weeds of the code and miss what should be considered a “feature”.

For me, the features versus bugs distinction is pretty simple. Bugs identify some defect in code, while features identify new functionality, which could, in turn, be a small enhancement or a big minimal marketable feature (MMF). Bugs and small features get put on our task board and pulled from there (unless it’s an emergency). Larger features get tracked through the 4-stage Kanban board.
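The routing rule above can be sketched in a few lines. This is only an illustration: the `Kind`, `WorkItem`, and `route` names and the returned labels are hypothetical, and I'm assuming the emergency exception applies only to bugs and small features.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    BUG = "bug"
    SMALL_FEATURE = "small feature"
    MMF = "minimal marketable feature"


@dataclass
class WorkItem:
    title: str
    kind: Kind
    emergency: bool = False


def route(item: WorkItem) -> str:
    """Return where a work item goes under the rule described above."""
    if item.kind is Kind.MMF:
        # Larger features are tracked through the staged board.
        return "4-stage Kanban board"
    if item.emergency:
        # Assumption: emergencies skip the queue instead of waiting to be pulled.
        return "handle immediately"
    # Bugs and small features wait on the task board until pulled.
    return "task board"
```

For example, `route(WorkItem("Fix login error", Kind.BUG))` lands on the task board, while the same item with `emergency=True` is handled right away.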

The more interesting distinction for me is between building new features and improving existing features. When you first launch a product, many things can and do go wrong. Many of these may not be directly attributable to defects but rather to shortcomings in design (usability), positioning, or something else.

Lindsay Brechler: Do you have a target ratio for bug fixes-to-features in development?

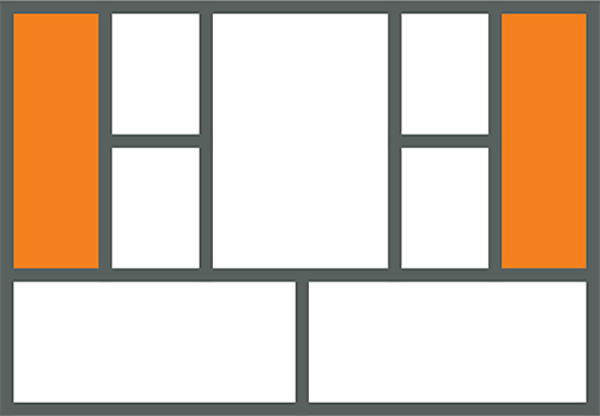

While we don’t have a target bugs-to-feature ratio, we do try and follow an 80/20 rule that places more emphasis on feature improvement over feature building:

This rule applies when your MVP results from dozens of problem and solution interviews. While it may be easy to rationalize a change under the guise of a “pivot,” it’s critical NOT to give up too early on your MVP.

Process Tradeoffs

A.T. and Eric Katerman: Do you follow this process for every feature? Aren’t some features just obvious?

You need to be results-oriented, not process-oriented.

We only follow the full-blown (4-stage Kanban) process for minimal marketable features (MMFs). Like your MVP, new MMFs are plagued with uncertainty — they are experiments. We follow this process to de-risk these big features in stages. The goal is waste reduction, by which I specifically mean building a better product using less time, money, and effort.

Whenever this isn’t true, i.e., you think you understand the problem and solution well enough to build a complete feature (or an entire MVP) with less effort than the staged process would take, skip the pre-build interviews, build it, and then measure the results with customers through a combo Problem/Solution interview.

Part of becoming a good practitioner is honing your judgment. Even if you grossly underestimate the effort, hidden costs, or impact of a particular feature, you should hopefully learn from the iteration and get better over time — provided you don’t skip measuring your results.

Wes Winham: How do you combat the added latency that waiting on qualitative validation gives?

I’ve always found pre-build customer validation to be worth the effort. At a minimum, it improves the solution (serving as a usability test). In several cases, it has prevented us from wasting weeks of effort building a “nice-to-have” feature.

Here’s an example: We recently mocked up some new timelines and versioning-oriented flows for Lean Canvas. Building all this functionality could easily have taken us weeks, and we hadn’t completely worked out how it would all fit together for ourselves. So we started with some “real-data” mockups, which took us a couple of days to build.

During this time, we went through half a dozen rapid iterations internally before showing the results to customers. The mockups helped us both articulate the problem we were solving and demonstrate how we intended to solve it. We talked to 10 customers, and while they identified strongly with the problem, almost all of them found the same parts of the solution “visually impressive” but lacking in addressing their immediate needs.

Here is one of those mockups:

This feedback helped us reduce the scope and prioritize our focus around features that promised the biggest potential impact — all without writing a single line of code.

Getting Customer Feedback

Wes Winham: How do you handle scheduling of qualitative review sessions? Do you find that it’s hard/easy to get 10 minutes of peoples’ times to talk about features? What about features that you’ve seen coming up in the sales/cust-dev cycle that aren’t tied to any specific current customers?

We do most of our review sessions over a 30-minute Skype call using video and screen sharing. Whenever it makes sense, we’ll also do face-to-face interviews. The key to getting customers to take the call is the same as getting early customer interviews for your MVP: you have to frame the conversation around a problem they care about. We are not showing them a feature but a problem of theirs we’re solving. This applies to both customer-initiated feature requests and internal ones.

Henri Liljeroos: Do you have a certain pool of users you use for interviews in stages 1 and 2 (Understand Problem and Define Solution)? I find it a bit troublesome to gather a group of users for every single feature. How do you do this in practice?

The easiest way to get a motivated customer to talk to you is to ask for additional information on a feature they requested (customer pull). We frame the initial call around understanding the underlying problem and, during the call, ask for permission to follow up with an early mockup and/or push the feature to them when it’s ready.

Beyond that, I do use a pool of customers who are actively using the product and motivated to help. Customer Development is a great way to establish these relationships organically. By the time you’ve gone through enough problem and solution interviews, you should have a core group of early adopters that are almost as invested in your product as you are.

Ricardo Trindade: In my case, my site uses a freemium model and I struggle between finding the correct balance between features for my customers (the ones who pay) and the users.

If you have paying users, i.e., customers, I’d only listen to them and first understand how they use your product and how they are different from your non-paying users. Then, I’d use that knowledge to model my “free” account for optimal conversion.

Killing Features

Lindsay Brechler: What do you do if you kill the feature — either before development or during quantitative analysis? Do you notify the customer that requested (or is now using) the feature?

Yes, in both cases. The first is easier. If, while building the feature, we deem it “not worth building” (we can kill a feature at any stage), we stop, explain our reasons, and suggest other possible solutions to the problem.

We did this recently with Lean Canvas when we removed some early work on “interview results tracking.” We realized that this was too big a problem to tackle for an activity that represented a small sliver in a startup’s lifecycle. We had also been using some good-enough “cobbled-together” alternative solutions ourselves. So we informed our user base we weren’t building this feature anymore, explained why, and told them how we were solving the problem ourselves.

Ricardo Trindade: Isn’t it “wrong” to release a user feature and then simply remove it when your testing shows it isn’t improving any metrics? Won’t the users feel they are being cheated, even though they aren’t spending a single penny for that feature?

It depends on how it’s done. We typically don’t make any marketing announcements (e.g., public blog posts) until the feature provides some value to enough customers. Partial rollouts, customer interviews, etc., help lower the risk along the way.

But in the case where a feature does get rolled out to everyone and still doesn’t move the needle, I wouldn’t hesitate to remove it, provided we shared our reasons.

The Unasked Question

I was surprised no one asked for details on how we measure the quantitative impact of features. Specifically, how we isolate the impact of individual features and track their effects over time. I think it’s because people assume metrics are the answer. But “traditional” metrics alone are not enough.

It’s time to say hello to the cohort (again). I’ll have a lot more to say on that shortly.
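Until then, here is a minimal sketch of what a cohort view looks like, as opposed to a single aggregate number. Everything in it is an assumption made for illustration rather than a description of how we measure things: the sample data, grouping users by the ISO week in which they signed up, and using paid conversion as the metric.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sample data: (user_id, signup_date, converted_to_paid)
users = [
    ("u1", date(2024, 1, 1), False),
    ("u2", date(2024, 1, 3), True),
    ("u3", date(2024, 1, 9), True),
    ("u4", date(2024, 1, 10), True),
    ("u5", date(2024, 1, 12), False),
]


def weekly_cohort_conversion(users):
    """Group users by ISO signup week and return the conversion rate per cohort."""
    totals = defaultdict(int)
    converted = defaultdict(int)
    for _user_id, signup, did_convert in users:
        year, week, _weekday = signup.isocalendar()
        cohort = (year, week)
        totals[cohort] += 1
        converted[cohort] += int(did_convert)
    # One rate per cohort instead of one blended rate for all users.
    return {cohort: converted[cohort] / totals[cohort] for cohort in sorted(totals)}


for cohort, rate in weekly_cohort_conversion(users).items():
    print(cohort, f"{rate:.0%}")
```

If a feature ships between two signup weeks, comparing the cohorts on either side of the release gives a rough first read on its effect, which a single running total would blur.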