The Missing Step Before Build/Measure/Learn

Lean Thinking, which heavily influenced the Lean Startup, has a deliberate step before the Build step. That step is Planning. In our exuberance for action, we often don’t spend the requisite time planning our experiments. This is a mistake and often leads to post-experiment rationalization and sub-optimal results.

Like everything else, planning does need to be time-boxed to avoid analysis paralysis and force action. But time spent on the right kind of planning is time well spent.

What follows are my ground rules for running good experiments. But before we start, let’s get something else out of the way.

Cognitive Biases

Cognitive biases affect our decision-making ability, and even celebrated scientists like Isaac Newton weren’t immune to these biases. For this reason, the scientific community has built several procedures and safeguards for how empirical research is conducted and evidence is gathered.

During clinical trials for medicinal drug testing, for instance, we go to great lengths to set up double-blind tests where information about the test is kept from both the testers and the subjects until after the test.

“The first principle is that you must not fool yourself and you are the easiest person to fool.”

- Richard P. Feynman

However, conducting entrepreneurial inquiry at this level of rigor can be overkill in many instances. Entrepreneurship isn’t knowledge acquisition for learning's sake but for driving results. We aim to quickly latch on to the right signal in the noise and then double down on the signal.

Testing a few gut-based shortcuts (or hunches) along the way is sometimes the fastest way to find these signals in the noise. The best antidote isn’t avoiding these biases but rather internalizing ground rules that counteract them.

A condensed list of seven habits that help you do this follows.

1. Declare your expected outcomes upfront

Much like a scientist doesn’t simply go into the lab and start mixing a bunch of compounds to see what happens, you need to declare the outcomes of your experiments up front.

“If you simply plan on seeing what happens you will always succeed at seeing what happens because something is guaranteed to happen.”

- Eric Ries, The Lean Startup

Couple this with the fact that entrepreneurs are especially gifted at rationalizing anything after the fact, and you can see why this hindsight bias should be avoided. It only delays the confrontation of brutal facts in your current reality.

I realize this is more easily said than done, and there are usually two deeper reasons for not wanting to declare outcomes upfront:

i. People hate to be proven wrong, and

ii. They feel they don’t have enough information.

The next two habits overcome these objections.

2. Make declaring outcomes a team sport

If you are the founder or CEO of the company, you might shy away from making bold public declarations of expected outcomes because you want to appear knowledgeable and in control. You don’t even have to be the CEO. If you are a designer proposing a new design, it’s much safer to be vague on results than to declare a specific lift in conversion rates and risk being proven wrong.

Most people shy away from upfront declarations because we attach our egos to our work. While egos are good for reinforcing ownership, they are bad for empirical learning.

Again, this is easier said than done, but here’s how I propose you get started. Don’t place the burden of declaring expected outcomes on a single person. Instead, make it a team effort, but with a twist.

Seeking team consensus too early can lead to groupthink. Expected outcomes declarations are particularly vulnerable to being influenced by the HiPPOs in the room.

HiPPO is a term used at Amazon that stands for the highest paid person’s opinion.

It’s much better to have team members declare outcomes individually and then compare notes. I recommend having a similar discussion with the actual results. If you want a little fun, you can turn this exercise into a game where you award a small token prize to the person with the closest estimate.

The point isn’t about being right or wrong but getting your team comfortable declaring expected outcomes. This exercise alone can dramatically help in improving your team’s judgment over time.
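If you want to run the game with a little structure, here is a minimal sketch in Python. The names, estimates, and result are made up for illustration, and `closest_estimate` is a hypothetical helper, not part of any tool mentioned in this post. The only assumption is that everyone writes down a single number privately before the experiment runs.

```python
def closest_estimate(estimates: dict[str, float], actual: float) -> str:
    """Return the name of the person whose estimate is nearest the actual result.

    On a tie, the person who appears first in `estimates` wins.
    """
    return min(estimates, key=lambda name: abs(estimates[name] - actual))


# Hypothetical estimates, each declared individually before the experiment.
estimates = {"Ana": 40, "Ben": 120, "Chen": 75}
actual_signups = 68  # hypothetical result measured after the experiment

winner = closest_estimate(estimates, actual_signups)
spread = max(estimates.values()) - min(estimates.values())

print(f"Closest estimate: {winner}")
print(f"Spread between highest and lowest estimate: {spread}")
```

A wide spread is worth talking about in its own right, since it usually means team members are working from different assumptions.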

If you are a solo founder, writing down your expected outcomes before running experiments is even more important.

3. Emphasize estimation, not precision

People also shy away from declaring expected outcomes upfront because they don’t have enough information to make meaningful predictions. How can you predict an expected download rate if you’ve never launched an iPhone app before?

You need to accept that you will never have perfect information AND that you need to make these kinds of predictions anyway.

Here are three ways to do this:

i. Search for analogs.

ii. Use your Lean Canvas, traction model, and customer factory dashboard.

iii. Start with ranges instead of absolute predictions.
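To make the third point concrete, here is a small Python sketch of a prediction stated as a range. The `RangeEstimate` class and all the numbers are hypothetical, and this is only one way you might record such a prediction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RangeEstimate:
    """A prediction stated as a low/high range rather than a single number."""
    low: float
    high: float

    def __post_init__(self) -> None:
        if self.low > self.high:
            raise ValueError("low must not exceed high")

    def contains(self, actual: float) -> bool:
        """True if the actual result landed inside the predicted range."""
        return self.low <= actual <= self.high

    def miss(self, actual: float) -> float:
        """Signed distance outside the range; 0.0 if the result is inside."""
        if actual < self.low:
            return actual - self.low
        if actual > self.high:
            return actual - self.high
        return 0.0


# Hypothetical: a wide first guess, then a narrower one after more learning.
first_guess = RangeEstimate(low=50, high=500)
later_guess = RangeEstimate(low=150, high=250)

print(first_guess.contains(180))  # inside the wide range
print(later_guess.miss(120))      # negative: the result fell short of the range
```

Starting wide and narrowing the range as you learn keeps you making predictions even when you have very little information.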

4. Measure actions versus words

Learning experiments, like problem interviews, can be a bit more challenging because qualitative learning can be subjective. Ask any entrepreneur how a customer call went, and it’s usually all positive. Rather than trying to qualitatively gauge what users say or score parts of the conversation, measure what they do (or did).

If you deconstruct the Problem and Solution Interviews in Running Lean, you’ll see they have multiple calls to action. While the stuff in between is how I generate new hypotheses (guesses), the call to action lends confidence to these guesses.

5. Turn your assumptions into falsifiable hypotheses

Next, it’s not enough to declare outcomes upfront. You have to make them falsifiable or capable of being proven wrong. It is extremely difficult to invalidate a vague theory. Most assumptions on your Lean Canvas don’t start as falsifiable hypotheses but as leaps of faith.

For example:

I believe that being considered an expert will drive early adopters to my product.

To turn leaps of faith into falsifiable hypotheses, you need to rewrite them as:

[Specific testable action] will drive [Expected measurable outcome].

This turns the statement above into something like:

Writing a blog post will drive > 100 sign-ups

There’s still something missing in the expected outcome statement above.

Can you figure out what it is?

.

.

6. Time-box your experiments

Say you run the experiment and decide to check back in a week. After a week, you have 20 sign-ups. You might decide it’s a good start and leave the experiment for another week. Now you have 50 sign-ups at the halfway point of your desired goal of 100. What should you do?

Being overly optimistic, entrepreneurs commonly fall into the trap of running the experiment “just a little while longer” to get better results. The problem is that those weeks easily turn into months when left unchecked.

Remember that time, not money or people, is the scarcest resource we have. The solution is time-boxing your experiment. We can then rewrite the expected outcome as follows:

Writing a blog post will drive > 100 sign-ups in 2 weeks.

These numbers and time-boxes aren’t pulled out of thin air. They come from your traction model.
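One way to keep yourself honest is to write the hypothesis down in a form that can only end as validated or invalidated once the time-box runs out. The following is a rough Python sketch under my own assumptions: the `Hypothesis` class, its field names, and the dates are hypothetical, and `observed` is assumed to be the count measured inside the time-box.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Hypothesis:
    action: str         # specific testable action
    metric: str         # what gets counted
    target: int         # expected outcome must exceed this number
    start: date         # day the experiment starts
    time_box_days: int  # length of the time-box

    @property
    def deadline(self) -> date:
        return self.start + timedelta(days=self.time_box_days)

    def evaluate(self, observed: int, today: date) -> str:
        """Return 'validated', 'invalidated', or 'running'.

        `observed` is assumed to be the count measured within the time-box.
        """
        if observed > self.target:
            return "validated"
        if today >= self.deadline:
            # Time is up: no "just a little while longer".
            return "invalidated"
        return "running"


# "Writing a blog post will drive > 100 sign-ups in 2 weeks."
h = Hypothesis("Writing a blog post", "sign-ups", 100, date(2024, 1, 1), 14)

print(h.evaluate(observed=20, today=date(2024, 1, 8)))   # after one week
print(h.evaluate(observed=50, today=date(2024, 1, 15)))  # after two weeks
```

In the scenario above, 50 sign-ups at the two-week mark comes back as invalidated, which is the point: the decision was made before the experiment started, not after.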

===

Side note:

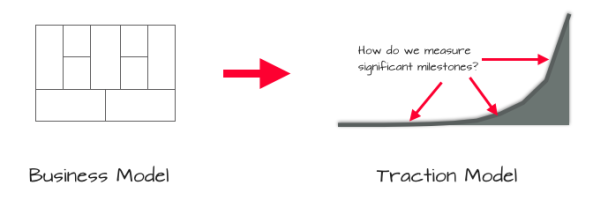

While your Lean Canvas tells your business model story, your traction model describes the desired output of this story. Like the Lean Canvas, it’s about being concise, which in this context is measuring the output of your business model using just a handful of metrics — versus drowning in a sea of numbers.

In other words:

The traction model is to the financial model,

what the Lean Canvas is to the business plan.

I’ll have more to share on creating a traction model in future posts.

===

7. Always use a control group

To tell if an experiment is working, you need to be able to benchmark it against a previous state. The equivalent in science would be establishing a control group. Your daily, weekly, and monthly metrics are a reasonable starting point. These time-based batches create a benchmark you should aim to beat in your experiments.

This is a kind of serial split testing, and it’s usually acceptable when you either don’t yet have a lot of users or aren’t running simultaneous overlapping experiments.

If this isn’t the case, the gold standard for creating a control group is through parallel split testing. In parallel split testing, you only expose a select sub-group of your user population to an experiment which is then compared against the rest of the population (control group) to determine progress (or not). This is also called an A/B test.

Finally, if you have enough traffic to test with and more than one possible conflicting solution, you can run an A/B/C (or more) test where you pit multiple strategies against each other.
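When you compare an experiment group against a control group in a parallel split test, you still need some way to judge whether the difference is more than noise. This post doesn't prescribe a statistical method, so treat the following as my own assumption: a minimal Python sketch of a two-proportion z-test using only the standard library. The function name and the conversion numbers are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of control (A) and experiment (B).

    Returns (z, p_value) for a two-sided test using the pooled proportion.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% or 100%: no measurable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical numbers: 50 of 1,000 control users signed up,
# versus 80 of 1,000 users exposed to the experiment.
z, p_value = two_proportion_z_test(conv_a=50, n_a=1000, conv_b=80, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With small user populations a test like this will rarely reach significance, which is one reason serial split testing against your time-based batches is often the more practical starting point.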

How Do You Remember All This?

Like the 1-page strategy proposal, there is also a 1-page experiment report. Ideas are first captured and shared using Strategy Proposals. Selected ideas are then tested using one or more experiments described in the Experiment Report. Instead of memorizing these seven habits, the experiment report incorporates them and functions like a checklist and an idea-sharing tool.

Unlike the Strategy Proposal, you don’t fill in this report all at once but in stages, following the build-measure-learn cycle of experiments. In future posts, I’ll share how we use the Lean Canvas, Strategy Proposal, and Experiment Reports to source, shortlist, and test ideas using time-boxed LEAN Sprints.